- AI @chatgptricks

The #1 AI Meta Skill

Most people get this wrong...

Wish AI would give you a perfect result on the first prompt every time?

Then today’s issue is for you.

As you likely know, working with AI means managing disappointment and frustration on a daily basis, mainly when you pour 5, 10, or 15 minutes into a prompt only to get a subpar (if not completely disastrous) response.

To address this, many people spend serious time perfecting their prompts, going back and forth with the AI, etc. But what if I told you there’s a way to get a near-perfect output on the very first attempt?

If that sounds like something you might be interested in, keep reading.

ChatGPT is Not Google

What you have to understand about AI is that if you're using it frequently, there's a high likelihood you've developed habits you don't even realize you have.

Examples include prompting a certain way, not paying attention to which model you're using, and even the way you react after the AI responds to your first request.

That's why using AI to its full potential is a skill (as opposed to something like a basic Google search, which takes very little skill).

And similar to learning how to golf, play the guitar, etc., it’s easy to develop bad habits as a beginner, especially repetitive mistakes you don’t even realize you’re making.

So in today's issue, I thought I’d explain a strategy that - if you use it - can help you get higher-quality outputs with less effort and less wasted time.

Two Strategies, One End Goal

Before we define the difference between prompt testing and prompt engineering, we need to accept that our goal is to get a high-quality result on the very first attempt.

I emphasize this because the two strategies below appear so similar you might think the only difference between them is semantics.

In reality, however, they are two very different (and very strategic) methods for achieving the same outcome.

The Problem with Prompt Engineering

Prompt engineering attempts to get a high-quality response by having the user write highly detailed (and in some cases extremely long) prompts. The logic here is that, because of their length and comprehensiveness, they should generate high-quality outputs with just one submission.

It's a legitimate strategy, and one that I recommend everybody use.

The problem (at least in my opinion) is that prompt engineering puts too much emphasis on the first prompt being perfect. The entire philosophy is based on “one-shotting” the AI, with no real strategy for what to do if your super prompt delivers a subpar response.*

*Besides going back, perfecting it, and then trying it again.

And if you pour a bunch of time and energy into writing the perfect prompt - and still get a subpar result - it can be difficult to identify where you went wrong, let alone figure out what you need to fix next time.

The easier (and more effective) approach?

Prompt Testing

If you remember the scientific method from school, you remember the idea of developing a hypothesis, isolating the variables, and then running your test.

Well, guess what?

When it comes to prompting an AI, we can do the same thing.

Unlike prompt engineering, prompt testing involves:

- Identifying one or more aspects of your prompt (that you believe could have a dramatic impact on the output)
- Developing alternative versions (known as variants in the testing world)
- Testing both versions against each other at the same time

As an example, I do a lot of copywriting using AI.

Isolate Your Variables

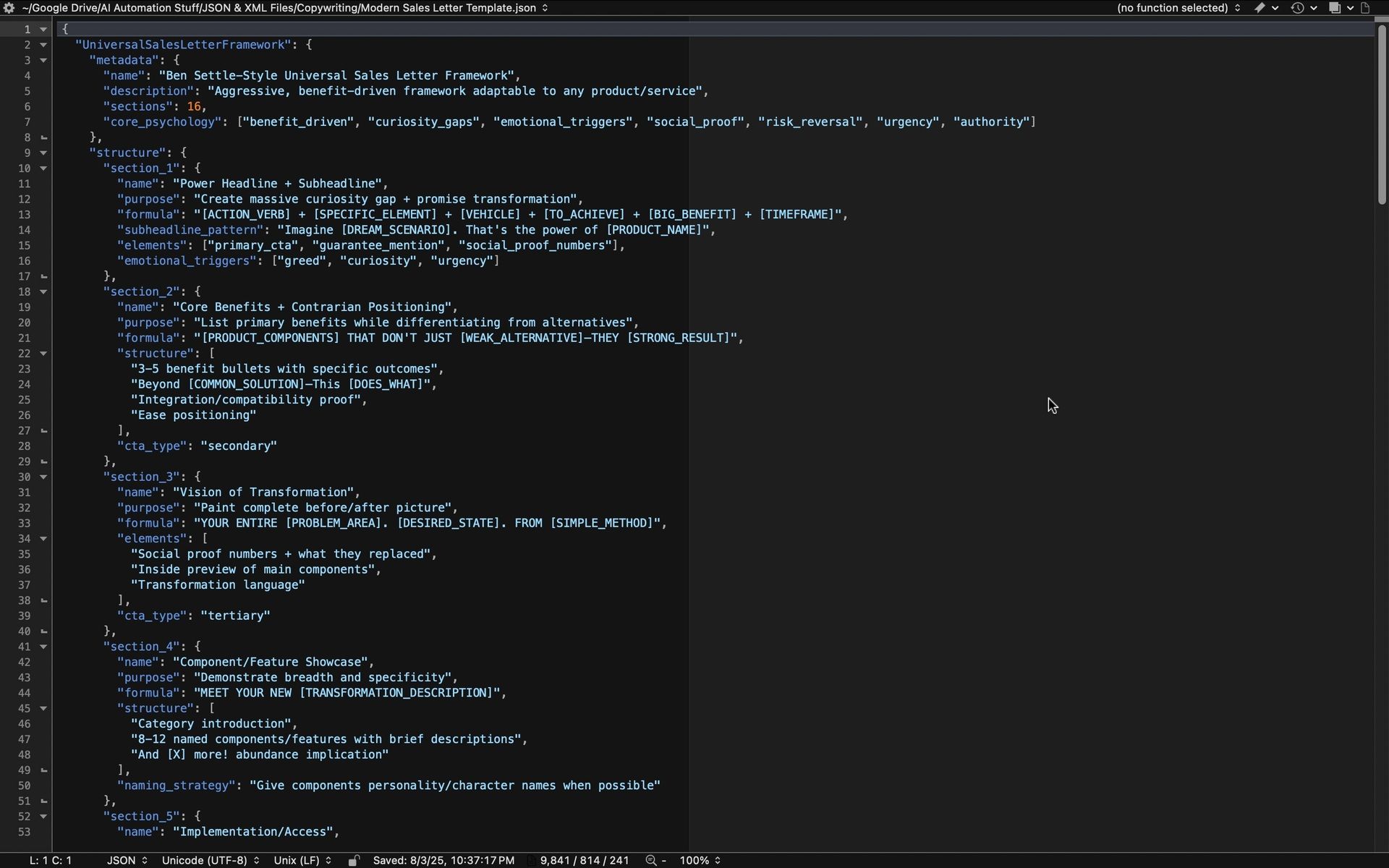

While I’ve had success using an XML sales letter framework, I noticed the outputs I got were way too rigid. They followed the framework way too closely for something as fluid and creative as copywriting.

So, rather than attempt to fix the issue through prompt engineering, I tested a PDF version of the sales letter framework instead of an XML version.

While this might not sound like a big change, in my experience the AI handles a text document very differently from a set of instructions delivered in XML or JSON format.

And sure enough, the sales letter I got as a result of using the PDF framework was far more in line with what I was looking for.

And that's just one example.

You Can Test Almost Anything

It doesn't matter if you're testing the prompt itself, the attachments you include as part of your prompt, the model that you're using, etc. Every aspect of the prompting process can be isolated and tested to identify which inputs generate the highest quality output.
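If you like to see ideas as code, here's a rough Python sketch of that one-variable-at-a-time approach. Everything in it is an assumption for illustration: `build_variants`, `run_prompt_test`, `fake_generate`, `fake_score`, and the `example-model` name are hypothetical, and the scores are made-up placeholders rather than real results. In practice you'd swap the two stand-in functions for your actual AI tool and your own rating of each output.

```python
from typing import Callable, Dict, List, Tuple

PromptConfig = Dict[str, str]


def build_variants(base: PromptConfig, variable: str, options: List[str]) -> List[PromptConfig]:
    """Copy the base config once per option, changing only the tested variable."""
    if variable not in base:
        raise KeyError(f"{variable!r} is not part of the base config")
    return [{**base, variable: option} for option in options]


def run_prompt_test(
    variants: List[PromptConfig],
    generate: Callable[[PromptConfig], str],
    score: Callable[[str], float],
) -> List[Tuple[float, PromptConfig]]:
    """Run every variant once and return (score, variant) pairs, best first."""
    results = [(score(generate(variant)), variant) for variant in variants]
    return sorted(results, key=lambda pair: pair[0], reverse=True)


# Hypothetical stand-ins so the sketch runs without any AI service.
def fake_generate(config: PromptConfig) -> str:
    # A real version would send the prompt and attachment to your AI tool.
    return f"[{config['model']}] letter written from a {config['framework_format']} framework"


def fake_score(output: str) -> float:
    # Placeholder ratings only. A real version would be your own 1-10 rating.
    return 8.0 if "PDF" in output else 5.0


base = {
    "prompt": "Write a sales letter for my product.",
    "framework_format": "XML",
    "model": "example-model",
}

# Only the framework format changes. The prompt and model stay fixed.
variants = build_variants(base, "framework_format", ["XML", "PDF"])
ranked = run_prompt_test(variants, fake_generate, fake_score)

for rating, variant in ranked:
    print(rating, variant["framework_format"])
```

The part that matters is `build_variants`: every variant shares the same prompt and model, so any difference in the output can be traced back to the one thing you changed.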

So if you're struggling with this AI stuff, I recommend you take a more scientific approach to your prompting.

While getting a high-quality output on the first attempt might not sound like a big deal, if you're a power user, odds are you're submitting hundreds of prompts per week.

Prompt Testing = A Massive Time Saver

And if you're constantly having to go back and forth to get the output you actually want, you could end up wasting multiple hours per week (and 100+ hours per year).

Is this stuff super dorky? Yes!

But if you plan on using AI for the next couple of decades, this is a skill that could save you literally thousands of hours over the next 20-40+ years.
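To make that math concrete, here's a quick back-of-the-envelope calculation in Python. The 2 hours per week is an assumed figure (plug in your own), and `hours_lost` is just a hypothetical helper name.

```python
def hours_lost(hours_per_week: float, years: int, weeks_per_year: int = 52) -> float:
    """Total hours spent on back-and-forth prompting over a number of years."""
    return hours_per_week * weeks_per_year * years


# Assumed figure: 2 hours per week lost to re-prompting.
for years in (1, 20, 40):
    print(f"{years} year(s): {hours_lost(2, years)} hours")
```

At 2 hours a week, that works out to 104 hours a year, 2,080 hours over 20 years, and 4,160 hours over 40.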

And as much as we love this nerdy AI stuff, I think it's fair to say most of us want to spend as little time as possible in front of our screens.

Interested in learning more about how to leverage advanced prompting to streamline your daily work tasks?

Make sure to follow me on X…

And join Sentient’s brand-new AI Automations members community (it’s free :)

Catch you next time,

Louis & Ivan


Want to connect with us? Send us a DM!